The Dark Side of AI: How AI is escalating cyber attacks

- Rishit Malik

- Mar 12, 2024


In recent years, significant advancements in Artificial Intelligence (AI) have had many positive impacts on various aspects of life, with self-driving cars promising to lower accident rates, virtual assistants (such as Siri and Alexa) helping people stay organized, and ChatGPT helping professionals and students (like me) work much more productively. Just a few years ago, I would never have expected AI to be capable of performing such extraordinary tasks! However, this got me thinking about how AI’s abilities could be misused, which led me to explore the darker side of AI, where its capabilities are exploited by cybercriminals every day.

Cyber attacks are becoming increasingly common. Just a few years ago, I had never heard of one, but now I receive phishing emails and come across ‘fake’ websites that ask for my personal information (like passwords) nearly every day. In 2017, cybercrime caused an estimated $1 trillion USD in damages; by 2022, that figure had grown to over $10 trillion USD, roughly a tenfold increase in just five years. Clearly, cyber attacks are increasing at an alarmingly fast rate, but why is this the case?
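To put that growth into perspective, here is a small Python sketch that turns the two rounded figures above (about $1 trillion in 2017 and about $10 trillion in 2022) into an overall growth factor and an average yearly growth rate. It is only arithmetic on these estimates, and the function names are my own.

```python
def growth_factor(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate (CAGR) between two values."""
    return (end / start) ** (1 / years) - 1


# Estimated global cybercrime damages in trillions of US dollars (rounded figures from the text)
damages_2017 = 1.0
damages_2022 = 10.0
years = 2022 - 2017

print(f"Growth factor: {growth_factor(damages_2017, damages_2022):.0f}x")
print(f"Average annual growth: {annual_growth_rate(damages_2017, damages_2022, years):.1%}")
```

In other words, a tenfold increase over five years means damages grew by roughly 58% every year on average, assuming steady compound growth.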

Exploring the link between advancements in AI and the rise in cybercrime

AI is one of the fastest-growing technologies in the world. The graph below shows the rapid growth of the AI industry’s market size each year, with projections indicating that this exponential trajectory will continue.

Graph 1: The Growth Trajectory of the AI Industry Market Size (2016–2025), in Millions of US Dollars

Cybercrime is also on the rise; the graph below shows the reported losses due to cybercrime over the past decade, with a distinct exponential rise between 2020 and 2022.

Graph 2: Reported losses due to cyber crimes in US dollars (2013 to 2022)

Comparing the two graphs, AI grew significantly from 2020 to 2022, which is also when cybercrime rose the most. This timing suggests a connection between the rise of AI and the increase in cybercrime.

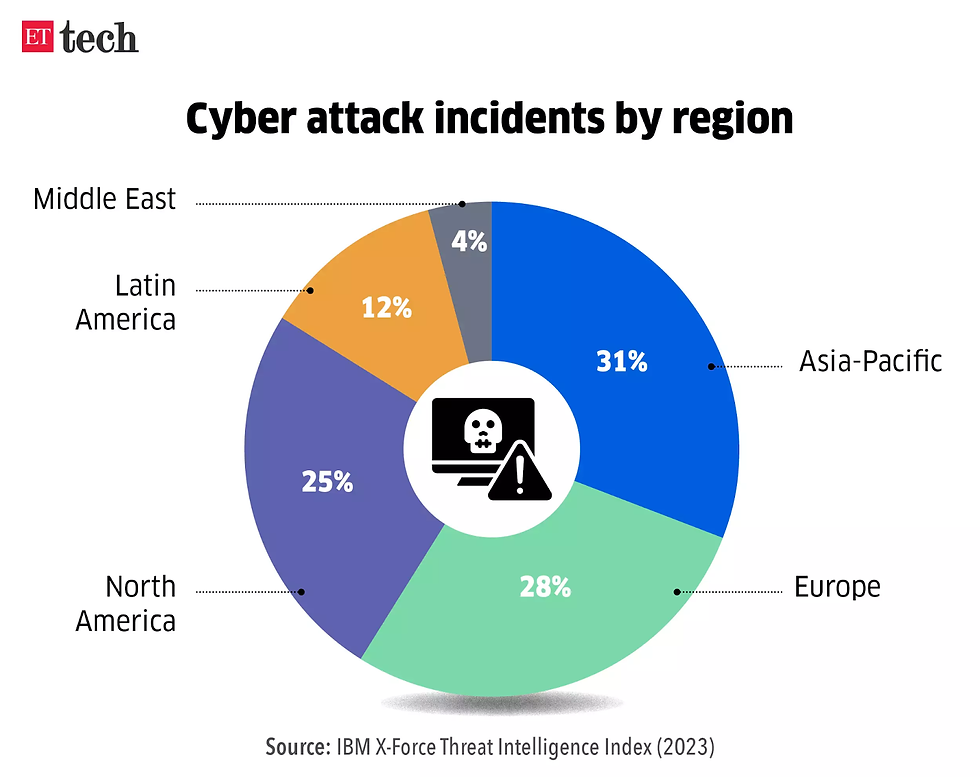

To explore this correlation further, let’s examine cyber attacks by region. The pie chart below shows that the largest share of cyber attack incidents occurred in the Asia Pacific region (31%), followed by Europe (28%), with North America ranking third (25%).

Chart 1: Cyber attack incidents (%) by region

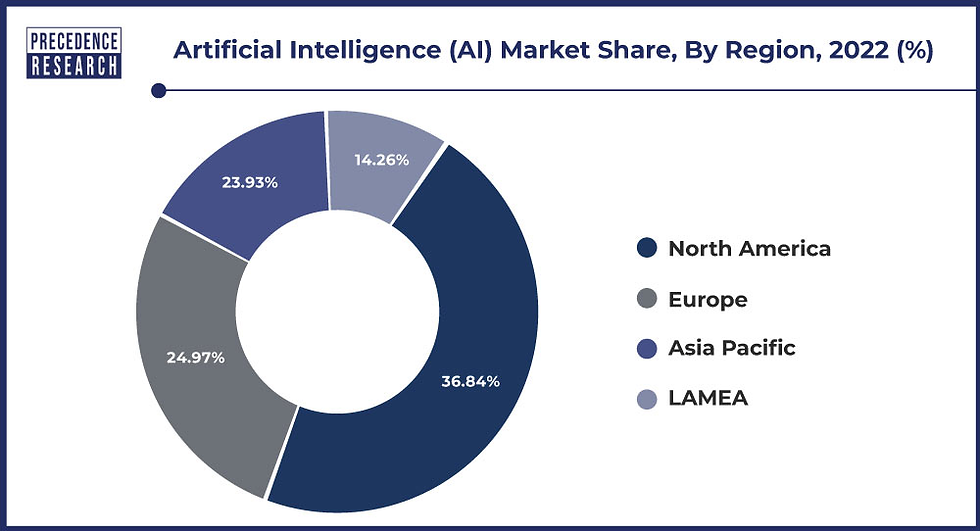

Similarly, in terms of AI market share, shown in the pie chart below, Asia Pacific leads with the largest share (36.84%), followed by Europe (24.97%), with North America in third place (23.93%).

Chart 2: Artificial Intelligence (AI) market share (%) by region in 2022

Comparing both charts, the three regions with the most cyber attack incidents (Asia Pacific, Europe, and North America) are also the top three in AI market share, in the same order. This suggests that regions using AI more extensively also experience more cyber attacks, pointing to an association between increased AI usage and more frequent cybercrime.
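The comparison between the two pie charts is really a comparison of rankings. The short Python sketch below uses only the percentages quoted from Chart 1 and Chart 2, ranks the three regions by each measure, and checks whether the orders match. It also computes how often two unrelated rankings of three regions would line up purely by chance: three items can be ordered in 3! = 6 ways, so about one time in six. The variable and function names are my own.

```python
from math import factorial

# Percentages quoted from Chart 1 and Chart 2 (top three regions only)
attack_share = {"Asia Pacific": 31.0, "Europe": 28.0, "North America": 25.0}
ai_market_share = {"Asia Pacific": 36.84, "Europe": 24.97, "North America": 23.93}


def rank_regions(shares: dict[str, float]) -> list[str]:
    """Return region names ordered from largest to smallest share."""
    return sorted(shares, key=shares.get, reverse=True)


attack_order = rank_regions(attack_share)
ai_order = rank_regions(ai_market_share)

print("Cyber attacks:", attack_order)
print("AI market:    ", ai_order)
print("Same order:", attack_order == ai_order)

# Chance that two unrelated orderings of these regions match exactly
print(f"Chance of a match by luck: {1 / factorial(len(attack_order)):.0%}")
```

The orders do match, which fits the pattern described above; the roughly 17% chance figure is a reminder that a three-region comparison is suggestive rather than conclusive on its own.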

Together, these trends suggest that the significant advancements in AI are contributing to the sudden increase in successful cyber attacks. Identifying this trend led me to delve into exactly how cybercriminals are using AI to make cyber attacks more frequent and effective.

How are cyber criminals using AI?

Improved phishing attacks: Phishing attacks are the most common form of cyber attack. They involve sending deceptive emails that try to trick recipients into revealing their personal information. Traditionally, these emails were written by humans and sent to millions of randomly selected people. With AI, however, cybercriminals can write personalized emails that match each victim’s interests and style, increasing the likelihood of success. A 2023 study by cybersecurity experts at SoSafe found that one in five people clicked on AI-generated phishing emails, and that 65% of those who clicked went on to reveal personal information, suggesting that AI-generated emails can be more effective and efficient than those crafted by humans.

Data breaches: Many websites and applications collect our data for targeted advertising, storing details such as our name, email address, home address and interests. For instance, Instagram, one of the most widely used applications, stores our interests based on the content we view and like most; you can find a screenshot below showing the topics that Instagram believes I like most.

If you use Instagram, you can see this information about yourself by opening the settings and activity section of your account and then searching for ad preferences.

Although large applications like Instagram are generally safe for users, less secure websites and applications that store data about their users are very susceptible to data breaches. Using AI, cybercriminals are making data breach attempts both more frequent and more dangerous, and many organizations are struggling to keep pace with these escalating threats. In 2018, an AI-assisted cyber attack on TaskRabbit (a platform that connects freelance handymen with clients) compromised the data of 3.75 million users, including sensitive information such as social security numbers and bank details.

Unlike the TaskRabbit breach, many data breaches don’t give cybercriminals access to highly sensitive data. Instead, they often expose less confidential information, such as users’ email addresses and interests. The simplified spreadsheet below shows an example of the data that cybercriminals could obtain. Of course, it only lists a handful of users; a real data breach would involve thousands or millions of records, and each user would likely have more than three recorded interests, but it serves as a simple example.

Even this limited information is enough for cybercriminals to target the affected users with phishing emails. With powerful AI models freely available, cybercriminals can feed in users’ data and instruct a model to generate a personalized phishing email for each user. I tested this by entering the dataset above into ChatGPT and asking it to write a personalized email to each user about a remote job opportunity, asking them to reply, if interested, with confidential information such as their address and passport details. Below, you can find both my prompt and the output ChatGPT generated for one of the individuals in the dataset.

Image: ChatGPT prompt

Image: ChatGPT output

The email generated by ChatGPT seemed very believable and matched the user’s interests well. This highlights how easily cybercriminals can use AI models like ChatGPT to create convincing phishing emails from compromised user data. In my example, the phishing email posed as a job offer, which is a common tactic. In 2022, the Federal Trade Commission reported that people lost nearly $68 million to scams involving fake job opportunities.

AI-driven password cracking: Another way cybercriminals use AI is to crack users’ passwords more effectively. Traditionally, hackers used brute-force algorithms, which test candidate passwords one after another until they find the right one. More recently, hackers have started using AI models to identify the most likely passwords and test those first, yielding a higher success rate. I looked at a study that compared the success rates of AI-driven and traditional brute-force attacks for various dictionary lengths (the number of words or terms in the set of potential passwords): 50, 100, 250, 500, 750, and 1000 words. The study averaged the results of 100 trials for each dictionary length. The results, shown in the graph below, make it clear that the AI-based algorithm is significantly more effective than the traditional algorithm at cracking passwords.

Graph 3: Success rates of an AI-based algorithm vs. a traditional algorithm in password cracking
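The study’s exact method isn’t described here, so the Python sketch below is only a toy illustration of why guessing the most likely passwords first helps, not a reproduction of the study. It assumes that password popularity follows a Zipf-like distribution (a few passwords are very common, most are rare) and that each attacker gets a fixed budget of 25 guesses. The “traditional” attacker works through the dictionary in an order unrelated to popularity (modelled here as a random shuffle), while the “AI-based” attacker is assumed to already know which entries are most likely and tries those first. The distribution, the guess budget, and all names are my own assumptions; like the study, it averages 100 trials per dictionary length.

```python
import random


def popularity_weights(dictionary_size: int) -> list[float]:
    """Zipf-like weights (an assumption): the entry at rank r is chosen with weight 1/r."""
    return [1.0 / rank for rank in range(1, dictionary_size + 1)]


def success_rate(dictionary_size: int, guess_budget: int, likely_first: bool,
                 trials: int = 100, seed: int = 0) -> float:
    """Fraction of trials in which the target password is found within the guess budget."""
    rng = random.Random(seed)
    # Entry 0 is the most popular password, entry 1 the next most popular, and so on.
    entries = list(range(dictionary_size))
    weights = popularity_weights(dictionary_size)
    found = 0
    for _ in range(trials):
        # The victim's password is drawn according to popularity.
        target = rng.choices(entries, weights=weights, k=1)[0]
        if likely_first:
            # "AI-based": guesses ordered from most to least likely.
            guesses = entries
        else:
            # "Traditional": guesses in an order unrelated to popularity.
            guesses = entries[:]
            rng.shuffle(guesses)
        if target in guesses[:guess_budget]:
            found += 1
    return found / trials


for size in (50, 100, 250, 500, 750, 1000):
    traditional = success_rate(size, guess_budget=25, likely_first=False)
    ai_based = success_rate(size, guess_budget=25, likely_first=True)
    print(f"{size:>4} words: traditional {traditional:.0%}, AI-based {ai_based:.0%}")
```

Because the AI-based attacker spends its limited guesses on the most popular passwords, it succeeds far more often at every dictionary length in this toy model, which matches the study’s overall finding. The exact percentages depend entirely on the assumed distribution and guess budget.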

Conclusion: AI has the potential to improve the world in many ways, but there is also a darker side to AI, where its power is being misused by cybercriminals every day. As AI continues to advance, it gives cybercriminals the means to commit more frequent and more dangerous crimes. That is why it is important for more people to learn about cyber attacks and how to stay safe online.

References:

Oppermann, A. (2022, January 1). Artificial Intelligence Market Size | DataSeries. Medium. https://medium.com/dataseries/artificial-intelligence-market-size-a99e194c184a

Crane, C. (2023, October 31). A look at 30 key cyber crime statistics [2023 data update]. Hashed Out by The SSL Store™. https://www.thesslstore.com/blog/cyber-crime-statistics/

ETtech. (2023, February 22). Asia-Pacific saw highest number of cyberattacks for second straight year in 2022: report. The Economic Times. https://economictimes.indiatimes.com/tech/technology/asia-pacific-saw-highest-number-of-cyberattacks-for-second-straight-year-in-2022-report/articleshow/98157503.cms?from=mdr

Artificial intelligence (AI) market size, growth, report by 2032. (2022). https://www.precedenceresearch.com/artificial-intelligence-market

Guembe, B., Azeta, A. A., Misra, S., Osamor, V. C., Sanz, L. F., & Pospelova, V. (2022). The emerging threat of AI-driven cyber attacks: a review. Applied Artificial Intelligence, 36(1). https://doi.org/10.1080/08839514.2022.2037254

SoSafe. (2023). One in five people click on AI-generated phishing emails, SoSafe data reveals — SoSafe. https://sosafe-awareness.com/company/press/one-in-five-people-click-on-ai-generated-phishing-emails-sosafe-data-reveals/

Howarth, J. (2023, November 29). The ultimate list of cyber attack stats (2024). Exploding Topics. https://explodingtopics.com/blog/cybersecurity-stats

Freeze, D. (2023, January 11). Cybercrime to cost the world 8 trillion annually in 2023. Cybercrime Magazine. https://cybersecurityventures.com/cybercrime-to-cost-the-world-8-trillion-annually-in-2023/

Kelly, J. (2023, June 1). Fake job scams are becoming more Common — Here’s how to protect yourself. Forbes. https://www.forbes.com/sites/jackkelly/2023/06/01/fake-job-scams-are-becoming-more-common-heres-how-to-protect-yourself/?sh=39b4d80118f6

Uy, P., & Uy, P. (2023, August 2). AI Cyber-Attacks: The growing threat to cybersecurity and countermeasures. IPV Network. https://ipvnetwork.com/ai-cyber-attacks-the-growing-threat-to-cybersecurity-and-countermeasures/

Fighting AI-Driven cybercrime requires AI-Powered data security — SPONSOR CONTENT FROM COMMVAULT. (2023, November 27). Harvard Business Review. https://hbr.org/sponsored/2023/10/fighting-ai-driven-cybercrime-requires-ai-powered-data-security
